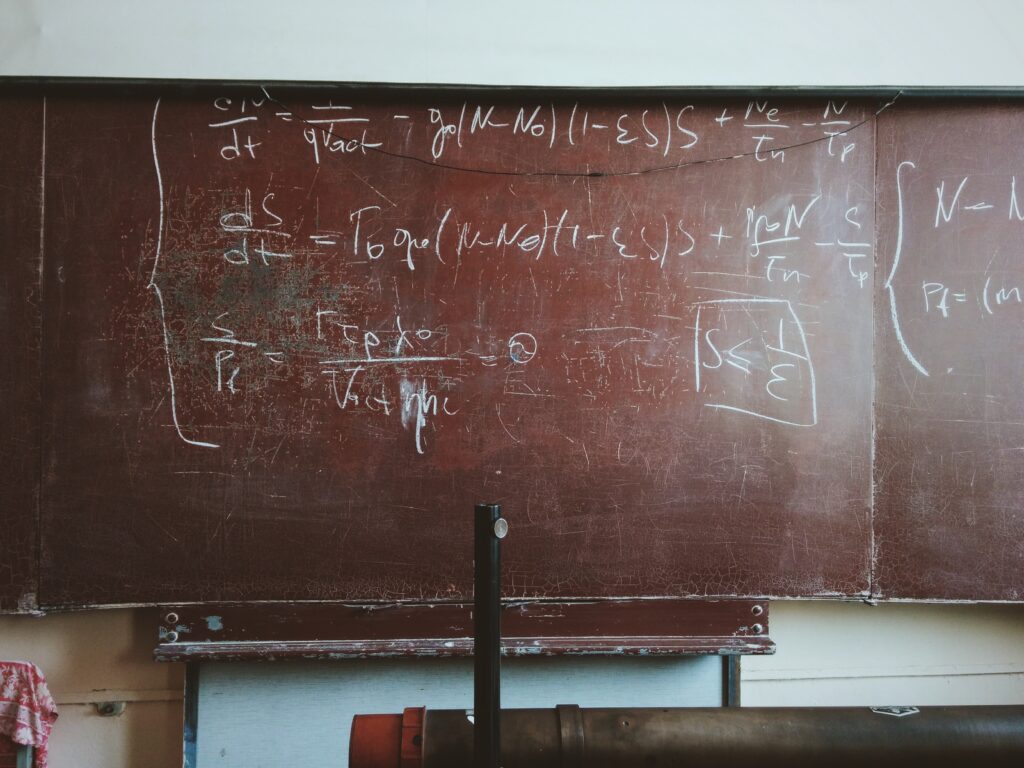

The Problem of Complexity

BY UNDERSTANDING AND AVOIDING UNNECESSARY COMPLEXITY, THE POSSIBILITY OF ERRORS BECOMING COMPOUNDED OVER TIME CAN BE REDUCED

Assuming machines themselves are eventually responsible for producing an intelligence capable of truly generalised learning, the developer’s role is actually a simple one – they just need to define learning rigorously enough for a computer to understand it as intended. Once that is accomplished, the algorithm could iteratively and independently improve its own ability to learn over generations. This iterative method of improvement means that – when developers are successful in programming a first generation learner – it is very possible that the algorithm itself could be surprisingly simple in nature. Indeed, there is a strong argument that keeping things simple from the outset would make future success far more likely.


By avoiding unnecessary complexity in their models’ designs, developers mitigate the risk of errors creeping in and influencing the models’ output over subsequent generations. The impact of such compounding is unlikely to be malicious or damaging in nature; however, opacity around how the model actually produces an outcome – and whether the results can even be relied upon – makes the model unpredictable. This in turn makes it susceptible to misunderstanding or even misuse. In this sense, a simple algorithm comprising a few hundred lines of code, which is capable of producing much more detailed algorithms of its own, is far preferable to one where there is even the slightest doubt around how it produces a result. Iterative developments from even humble beginnings could easily be the first steps towards a general purpose learner if the theory around which it is built is rigorous enough.
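To make the compounding concern a little more concrete, here is a minimal sketch in Python. It rests on an assumption the article does not make: that each generation has a fixed, independent chance of introducing an undetected error, and that a more complex design has a higher chance per generation. The function name and the example rates are hypothetical and chosen purely for illustration.

```python
def chance_of_clean_lineage(error_rate_per_generation: float, generations: int) -> float:
    """Probability that no generation in the lineage introduced an error.

    Assumes (for illustration only) that errors arise independently in each
    generation with the same fixed probability.
    """
    if not 0.0 <= error_rate_per_generation <= 1.0:
        raise ValueError("error_rate_per_generation must be between 0 and 1")
    if generations < 0:
        raise ValueError("generations must be non-negative")
    # Each generation is error-free with probability (1 - rate); independence
    # lets us multiply those probabilities across all generations.
    return (1.0 - error_rate_per_generation) ** generations


# Hypothetical per-generation error rates for a simple and a complex design.
for label, rate in (("simple design", 0.001), ("complex design", 0.02)):
    for generations in (10, 100):
        p = chance_of_clean_lineage(rate, generations)
        print(f"{label}, {generations} generations: {p:.1%} chance of an error-free lineage")
```

Under these assumed numbers, the gap between the two designs is modest after a handful of generations and widens sharply as generations accumulate, which is the intuition behind keeping the first generation learner as simple as possible.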

So, if complexity can cause errors to sneak into a model early on – errors which could prove disastrous once compounded by iterative advancements over generations – then understanding and controlling its effect should dramatically improve our chances of developing an intelligent machine. Complexity itself falls into three buckets – space, time and human – each of which has the potential to limit or even scupper a model’s chances of success.
